Learning from Untrimmed Videos: Self-Supervised Video Representation Learning with Hierarchical Consistency

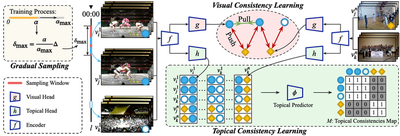

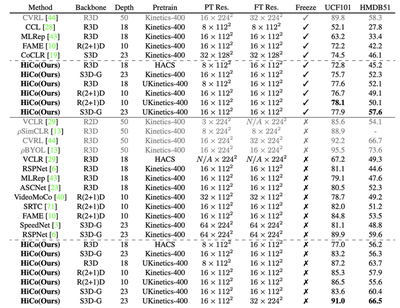

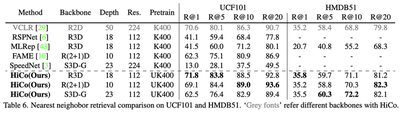

Natural videos provide rich visual content for self-supervised learning. Yet most existing approaches for learning spatio-temporal representations rely on manually trimmed videos, which limits both the diversity of visual patterns and the performance gains. In this work, we aim to learn representations by leveraging the more abundant information in untrimmed videos. To this end, we propose to learn a hierarchy of consistencies in videos, i.e., visual consistency and topical consistency: clip pairs tend to be visually similar when separated by a short time span, and tend to share similar topics when separated by a long time span. Specifically, we present HiCo, a hierarchical consistency learning framework in which visually consistent pairs are encouraged to have the same representation through contrastive learning, while topically consistent pairs are coupled through a topical classifier that distinguishes whether they are topic-related. Further, we impose a gradual sampling algorithm for the proposed hierarchical consistency learning and demonstrate its theoretical superiority. Empirically, we show that HiCo not only generates stronger representations on untrimmed videos but also improves representation quality when applied to trimmed videos. This is in contrast to standard contrastive learning, which fails to learn appropriate representations from untrimmed videos.
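To make the idea of sampling clip pairs at gradually increasing temporal distances more concrete, here is a minimal Python sketch. It is an illustration only, not the HiCo implementation: the function names `max_span` and `sample_clip_pair`, the linear schedule, and all numeric values are assumptions introduced here, and the abstract does not specify how the actual gradual sampling algorithm is defined.

```python
import random


def max_span(step, total_steps, min_span, full_span):
    """Allowed temporal gap (seconds) between two clips at a given training step.

    Assumption: the gap grows linearly from min_span to full_span.
    The paper's actual schedule may differ.
    """
    frac = min(max(step / total_steps, 0.0), 1.0)
    return min_span + frac * (full_span - min_span)


def sample_clip_pair(video_len, clip_len, span, rng=random):
    """Return start times (seconds) of two clips at most `span` seconds apart.

    A small `span` yields pairs that are likely to look alike (visual
    consistency); a large `span` yields pairs that are more likely to share
    only a topic (topical consistency).
    """
    last_start = video_len - clip_len
    if last_start < 0:
        raise ValueError("video is shorter than one clip")
    first = rng.uniform(0.0, last_start)
    # Keep the second clip inside the video and within `span` of the first.
    lo = max(0.0, first - span)
    hi = min(last_start, first + span)
    second = rng.uniform(lo, hi)
    return first, second


if __name__ == "__main__":
    rng = random.Random(0)
    for step in (0, 50, 100):
        span = max_span(step, total_steps=100, min_span=1.0, full_span=60.0)
        a, b = sample_clip_pair(video_len=120.0, clip_len=2.0, span=span, rng=rng)
        print(f"step={step} span={span:.1f}s clip starts: {a:.1f}s, {b:.1f}s")
```

In a setup like this, the short-span pairs would feed a contrastive loss and the long-span pairs would feed a pair classifier, as the abstract describes at a high level; how the two objectives are combined is not stated here and is left out of the sketch.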

Citation

@inproceedings{qing2022hico,
  title={Learning from Untrimmed Videos: Self-Supervised Video Representation Learning with Hierarchical Consistency},
  author={Qing, Zhiwu and Zhang, Shiwei and Huang, Ziyuan and Xu, Yi and Wang, Xiang and Tang, Mingqian and Gao, Changxin and Jin, Rong and Sang, Nong},
  booktitle={{CVPR}},
  year={2022}
}